
Introducing the new Magic Quadrant for Public Cloud IaaS

I’m happy to announce that the new Gartner Magic Quadrant for Public Cloud Infrastructure as a Service has been published. (Client-only link. Non-clients can read a reprint.)

This is a brand-new Magic Quadrant. Our previous Magic Quadrant has essentially been split into two MQs: this new Public Cloud IaaS MQ, which focuses on self-service, and the Managed Hosting and Cloud IaaS MQ, an updated and narrower iteration of the previous MQ that concentrates on managed services.

It’s been a long and interesting and sometimes controversial journey. Threaded throughout this whole Magic Quadrant are the fundamental dichotomies of the market, like IT Operations vs. developer buyers, new applications vs. existing workloads, “virtualization plus” vs. the fundamental move towards programmatic infrastructure, and so forth. We’ve tried hard to focus on a pragmatic view of the immediate wants and needs of Gartner clients, which also reflect these dichotomies.

This is a Magic Quadrant unlike the ones we have historically done in our services research. It is focused on capabilities and features, in a manner much closer to the way that we compare software companies than to the way we evaluate things like network services, managed hosting, or data center outsourcing. This reflects the fact that public cloud IaaS goes far beyond just self-service VMs, creating significant disparities in provider capabilities.

In fact, for this Magic Quadrant, we tried just about every provider hands-on, which is highly unusual for Gartner’s evaluation approach. However, because Gartner’s general philosophy isn’t to do the kind of lab evaluations that we consider to be the domain of journalists, the hands-on stuff was primarily to confirm that providers had particular features, and the specifics of what they had, without having to constantly pepper them with questions. Consequently, this also involved reading a lot of documentation, community forums, etc. This wasn’t full-fledged, serious trialing. (The expense of the trials was paid on my personal credit card. Fortunately, since this was the cloud, it amounted to less than $150 all told.)

However, like all Magic Quadrants, there’s a heavy emphasis on business factors and not just technology — we are evaluating the positions of companies in the market, which are a composite of many things not directly related to comparable functionality of the services.

Like other Magic Quadrants, this one is targeted at the typical Gartner client — a mid-market company or an enterprise, but also our many tech company clients who range from tiny start-ups to huge monoliths. We believe that cloud IaaS, including the public cloud, is being used to run not only new applications, but also existing workloads. We don’t believe that public cloud IaaS is only for apps written specifically for the cloud, and we certainly don’t believe that it’s only for start-ups or leading-edge companies. It’s a nascent market, yes, but companies can use it productively today as long as they’re thoughtful about their use cases and deployment approach. We also don’t believe that cloud IaaS is solely the province of mass-scale providers; multi-tenancy can be cost-effectively delivered on a relatively small scale, as long as most of the workloads are steady-state (which legacy workloads often are).

Service features, sales, and marketing are all impacted by the need to serve two different buying constituencies, IT Operations and developers. Because we believe that developers are the face of business buyers, though, we believe that addressing this audience is just as important as addressing the traditional IT Operations audience. We do, however, emphasize a fundamentally corporate audience; this is definitely not an MQ aimed at, say, an individual building an iPhone app, or even non-technology small businesses.

Nowhere are those dichotomies better illustrated than in two of the Leaders in this MQ: Amazon Web Services and CSC. Amazon excels at addressing a developer audience and new applications; CSC excels at addressing a mid-market IT Operations audience on the path towards data center transformation and automation of IT operations management, by migrating to cloud IaaS. Both companies address audiences and use cases beyond that expertise, of course, but they have enormously different visions of their fundamental value proposition, and both visions are valid. (For those of you who are going, “CSC? Really?”: yes, really. And they’ve been quietly growing far faster than any other VMware-based provider, so for all you vendors out there, if they’re not on your competitive radar screen, they should be.)

Of course, this means that no single provider in the Magic Quadrant is a fantastic fit for all needs. Furthermore, the right provider is always dependent upon not just the actual technical needs, but also the business needs and corporate culture, like the way that the company likes to engage with its vendors, its appetite for risk, and its viewpoint on strategic vs. tactical vendors.

Gartner has asked its analysts not to debate published research in public (per our updated Public Web Participation policy), especially Magic Quadrants. Consequently, I’m willing to engage in a certain amount of conversation about this MQ in public, but I’m not going to get into the kinds of public debates that I got into last year.

If you have questions about the MQ or are looking for more detail than is in the text itself, I’m happy to discuss. If you’re a Gartner client, please schedule an inquiry. If you’re a journalist, please arrange a call through Gartner’s press office. Depending on the circumstances, I may also consider a discussion in email.

This was a fascinating Magic Quadrant to research and write, and within the limits of that “no public debates” restriction, I may end up blogging more about it in the future. Also, as this is a fast-moving market, we’re highly likely to target an update for the middle of next year.

Five reasons you should work at Gartner with me

Gartner is hiring again! We’ve got a number of open positions, actually, and we’re somewhat flexible about how we use the headcount; we’re looking for great people, and the jobs can adapt to some extent based on what they know. This also means we’re flexible on seniority level: anywhere from about five years of experience to “I have been in the industry forever” is fine. We’re very flexible on background, too; as long as you have a solid grasp of technology and an understanding of business, we don’t care if you’re currently an engineer, IT manager, product manager, marketing person, journalist, etc.

First and foremost, we’re looking for an analyst to cover the colocation market, and preferably also data center leasing. Someone who knows one or more other adjacent spaces as well would be great — peering, IP transit, hosting, cloud IaaS, content delivery networks, network services, etc.

We could also use an analyst who can cover some of the things that I cover — cloud IaaS, managed hosting, CDNs, and general Internet topics (managed DNS, domain registration, peering, and so on).

These positions will primarily serve North American clients, but we don’t care where you’re located as long as you can accommodate normal US time zones; these positions are work-from-home.

I love my job. You’ve got to have the right set of personality traits to enjoy it, but if the following five things sound awesome to you, you should come work at Gartner:

1. It is an unbeatably interesting job for people who thrive on input. You will spend your days talking to IT people from an incredibly diverse array of businesses around the globe, who all have different stories to tell about their environments and needs. Vendors will tell you about the cool stuff that they’re doing. You will be encouraged to inhale as much information as you can, reading and researching on your own. You will have one-on-one meetings with hundreds of clients each year (our busiest analysts do over 1,500 one-on-one interactions!), and get to meet countless more in informal interactions. Many of the people you talk to will make you smarter, and all of them will make you more knowledgeable.

2. You get to help people in bite-sized chunks. People will tell you their problems and you will try your best to help them in thirty minutes. After those thirty minutes, their problem is no longer yours; they’re the ones who are going to have to go back and fight through their politics and tangled snarl of systems to get things done. It’s hugely satisfying if you enjoy that kind of thing, especially since you do often get long-term feedback about how much you helped them. You’ll help IT buyer clients choose the right strategy, pick the right vendors, and save tons of money by smart contract negotiation. You’ll help vendors with their strategy, design better products, understand the competition, and serve their customers better. You’ll help investors understand markets and companies and trends, which translates directly into helping them make money. Hopefully, you’ll get to influence the market in a way that’s good for everyone.

3. You get to work with great colleagues. Analysts here are smart and self-motivated. There’s no real hierarchy; we work collaboratively and as equals, regardless of our titles, with ad-hoc leadership as needed. Also, analysts are articulate, witty, and opinionated, which always makes for fun interactions. Your colleagues will routinely provide you with new insights, challenge your thinking, and provide amazing amounts of expertise in all kinds of things. There’s almost always someone who is deeply expert in whatever you want to talk about. Analysts are Gartner’s real product; research and events are a result of the people. Our turnover is extremely low.

4. Your work is self-directed. Nobody tells you what to do here beyond some general priorities and goals; there’s very little management. You’re expected to figure out what you need to do with some guidance from your manager and input from your peers, manage your time accordingly, and go do it. You mostly get to figure out how to cover your market, and aim towards what clients are interested in. Your research agenda and coverage are flexible, and you can expand into whatever you can be expert in. You set your own working hours. Most people work from home.

5. We don’t do any pay-for-play. Integrity is a core value at Gartner, so you won’t be selling your soul. About 80% of our revenue comes from IT buyers, not vendors. Unlike most other analyst firms, we don’t do commissioned white papers, or anything else that could be perceived as an endorsement of a vendor; also, unlike some other analyst firms, analysts don’t have any sales responsibility for bringing in vendor sales or consulting engagements, or being quoted in press releases, etc. You will neither need to know nor care which vendors are clients or what they’re paying (any vendor can do briefings, though only clients get inquiry). Analysts must be unbiased, and management fiercely defends your right to write and say anything you want, as long as it’s backed up by solid evidence and is presented professionally, no matter how upset it makes a vendor. (Important downside: We don’t allow side work like participation in expert nets, and we don’t allow you or your immediate family to have any financial interest in the areas you cover, including employment or stock ownership in related companies. If your spouse works in tech, this can be a serious limiter.)

Poke me if you’re interested. I have a keen interest in seeing great people hired into these roles fast, since they’re going to be taking a big chunk of my current workload.

Managed Hosting and Cloud IaaS Magic Quadrant

We’re wrapping up our Public Cloud IaaS Magic Quadrant (the drafts will be going out for review today or tomorrow), and we’ve just formally initiated the Managed Hosting and Cloud IaaS Magic Quadrant. This new Magic Quadrant is the next update of last year’s Magic Quadrant for Cloud Infrastructure as a Service and Web Hosting.

Last year’s MQ mixed both managed hosting (whether on physical servers, multi-tenant virtualized “utility hosting” platforms, or cloud IaaS) as well as the various self-service cloud IaaS use cases. While it presented an overall market view, the diversity of the represented use cases meant that it was difficult to use the MQ for vendor selection.

Consequently, we added the Public Cloud IaaS MQ (covering self-service cloud IaaS), and retitled the old MQ to “Managed Hosting and Cloud IaaS” (covering managed hosting and managed cloud IaaS). They are going to be two dramatically different-looking MQs, with a very different vendor population.

The Managed Hosting and Cloud IaaS MQ covers:

- Managed hosting on physical servers

- Managed hosting on a utility hosting platform

- Managed hosting on cloud IaaS

- Managed hybrid hosting (blended delivery models)

- Managed Cloud IaaS (at minimum, guest OS is provider-managed)

Both portions of the market are important now, and will continue to be important in the future, and we hope that having two Magic Quadrants will provide better clarity.

Results of Symposium workshop on Amazon

I promised the attendees at my Gartner Symposium workshop, called “Using Amazon Web Services“, that I would post the notes from the session, so here they are — with some context for public consumption.

A workshop is a structured, facilitated discussion designed to help participants work through a problem, come up with best practices, etc. This one had thirty people, all from IT organizations that were either using Amazon or planning to use Amazon.

Because I didn’t know what level of experience with Amazon the workshop attendees would have, I actually prepared two workshops in advance. One of them was a highly structured work-through of preparing to use Amazon in a more formal way (i.e., not a single developer with a credit card or the like), and the other was a facilitated sharing of challenges and best practices amongst current adopters. As the room skewed heavily towards people who already had a deployment well under way, this workshop focused on the latter.

I started the workshop with introductions: people, companies, current use cases. Then, I asked attendees to share their use cases in more detail in their smaller working groups. This turned into a set of active discussions that I allowed extra time for, before I asked each of the groups to make a list of their most significant challenges in adopting/using Amazon, and their solutions, if any. Throughout, I circulated the room, listening and, rarely, commenting. Each group then shared their findings, and I offered some commentary and then did an open Q&A (with some more participant sharing of their answers to questions).

Broadly, I would say that we had three types of people in the room. We had folks from the public sector and education, who were at a relatively early stage in adoption; we had people who were test/dev oriented but in a significant way (i.e., formal adoption, not a handful of developers doing their thang); and we had people who were more e-business oriented (including people from net-native businesses like SaaS, as well as traditional businesses with a hosting type of need), although that could be test/dev or production. Most of the people were mid-level IT management with direct responsibility for the Amazon services.

Some key observations:

Dealing with the financial aspects of moving to the cloud is hard. Understanding the return on investment, accurately estimating costs in advance, comparing costs to internal costs, and understanding the details of billing were common challenges of the participants. Moreover, it raises the issue of “is capital king or is expense king?” Although the broader industry is constantly talking about how people are trying to move to expense rather than capital, workshop participants frequently asserted that it was easier for them to get capital than to up their recurring expenses. (As a side note, I have found that to be a frequent assertion in both inquiry and conference 1-on-1s.) Finally, user management, cost control, and turning resources on/off appropriately were problematic in the financial context.
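To make the cost-control point concrete, here is a minimal sketch of the kind of back-of-the-envelope arithmetic involved in turning resources off when they aren't needed. The hourly rate, the 730-hour month, and the 12-hour, 5-day schedule are all hypothetical assumptions chosen for illustration; they are not actual Amazon pricing or figures reported by the workshop participants.

```python
# Hypothetical back-of-the-envelope comparison: one instance left running
# around the clock versus the same instance switched off outside business hours.
# HOURLY_RATE is an assumed figure for illustration, not an actual Amazon price.

HOURLY_RATE = 0.10      # assumed USD per instance-hour
HOURS_PER_MONTH = 730   # approximation: 24 hours * 365 days / 12 months


def monthly_cost(hours_on: float, rate: float = HOURLY_RATE) -> float:
    """Cost of one instance running for hours_on hours in a month."""
    return hours_on * rate


def scheduled_hours_per_month(hours_per_day: int = 12, days_per_week: int = 5) -> float:
    """Approximate monthly hours for an instance that runs only on a fixed weekly schedule."""
    return hours_per_day * days_per_week * 52 / 12  # 52 weeks spread over 12 months


always_on = monthly_cost(HOURS_PER_MONTH)
scheduled = monthly_cost(scheduled_hours_per_month())
savings = 1 - scheduled / always_on

print(f"Always on:           ${always_on:.2f}/month")
print(f"Business hours only: ${scheduled:.2f}/month")
print(f"Savings:             {savings:.0%}")
```

The specific number matters less than the sensitivity: the savings exist only if someone actually turns the resources off, which is exactly the user-management and cost-control problem the participants described.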

Move low-risk workloads first. The workshop participants generally assessed Amazon as being suitable only to test/dev, non-mission-critical workloads, and things that had specifically been designed with Amazon’s characteristics in mind. Participants recommended a risk profile of apps, and moving low-risk apps first. They also saw their security organizations as being a barrier to adoption. Many had issues with their Legal departments either trying to prevent use of services or causing issues in the contracting process (what Amazon calls an Enterprise Agreement); participants recommended not involving Legal until after adopting the service.

Performance is a problem. Performance was cited as a frequent issue, especially storage performance, which participants noted was unsuitable for their production applications. One participant made the key point that many test/dev situations also require highly performant storage (something he had first discovered when his ILM strategy placed test/dev storage on a lower, more commodity tier, which impacted his developers).

Know what your SLA isn’t. Amazon’s limited SLAs were cited as an issue, particularly the mismatch in what many users thought the SLA was versus what it actually was, and what it’s actually turned out to be in practice (given Amazon’s outages this year). Participants also stressed business continuity planning in this context.

Integration is a challenge. Participants noted that going to test/dev in the cloud, while maintaining production in an internal data center, splits the software development lifecycle across data centers. This can be overcome to some degree with the appropriate tools, but still creates challenges and sometimes outright problems. Also, because speed of deployment is such a driving factor to go to the cloud, there is a resulting fragmentation of solutions. A service catalog would help some of these issues.

Data management can be a challenge. Participants were worried about regulatory compliance and the “where is my data?” question. Inexperienced participants were often not aware that non-S3 data is generally local to an availability zone. But even beyond that, there’s the question of what data is being put where by the cloud users. Participants with larger amounts of data also faced challenges in moving data in and out of the cloud.

Amazon isn’t the right provider for all workloads in the cloud. Several workshop participants used other cloud IaaS providers in addition to Amazon, for a variety of other reasons — greater ease of use for users who didn’t need complex things, enterprise-grade availability and performance, better manageability, security capabilities, and so forth.

I have conducted cloud workshops and what Gartner calls analyst/user roundtables at a bunch of our conferences now, and it’s always interesting what the different audiences think about, and how much it’s evolving over time. Compared to last year’s Symposium, the state of the art of Amazon adoption amongst conference attendees has clearly advanced hugely.

Gartner Symposium this week

I am at Gartner Symposium in Orlando this week, and would be happy to meet and greet anyone who feels like doing so.

I am conducting a workshop on Thursday, at 11 am in Salon 7 in Yacht and Beach, called “Using Amazon Web Services“. (The workshop is full, but it’s always possible there may be no-shows if you’re trying to get in.) This workshop is targeted at attendees who are currently AWS customers, or who are currently evaluating AWS.

Gartner Invest clients, I’ll be at the Monday night event, and willing to chatter about anything (CDNs, especially Akamai, seem to be the hot topic, but I’m getting a fair chunk of questions about Rackspace and Equinix).

I hope to blog about some trends from my one-on-one interactions and other conversations at the conference as the week goes on.

What does the future of the data center look like to you?

Earlier this year, I was part of a team at Gartner that took a futuristic view of the data center, in a scenario-planning exercise. The results of that work have been published as The Future of the Data Center Market: Four Scenarios for Strategic Planning (Gartner clients only). My blog entries today are by my colleague, project leader Joe Skorupa, and provide a glimpse into this research. See the introduction for more information.

The Scenarios

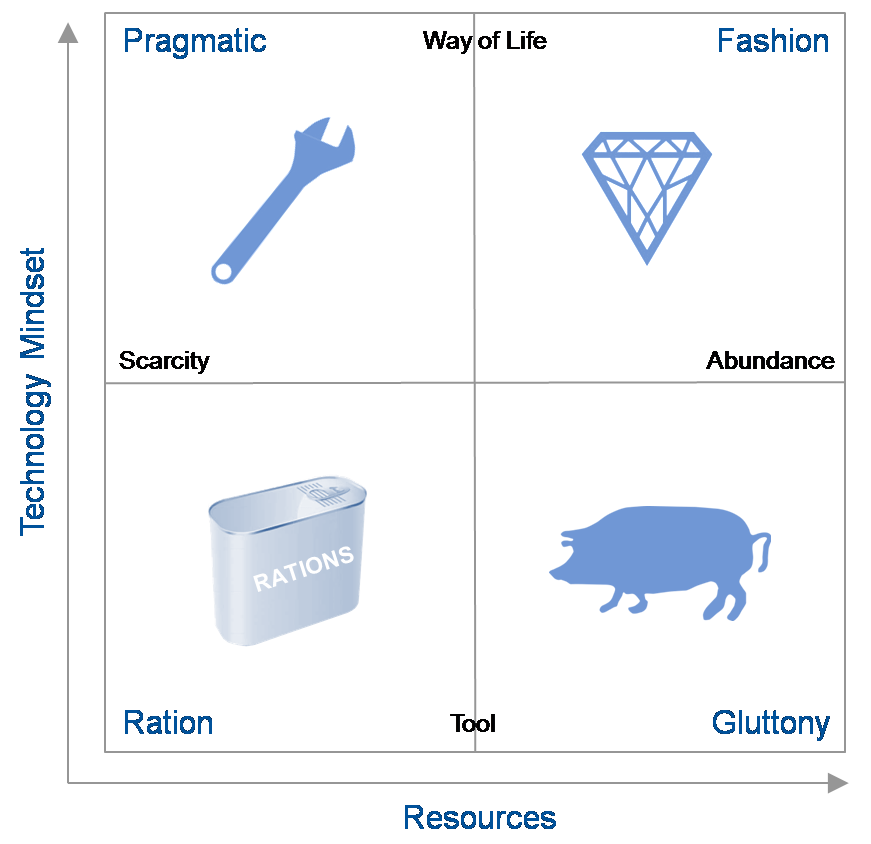

Scenarios are defined by the 4 quadrants that result from the intersection of the axes of uncertainty. In defining our scenarios we deliberately did not choose technology-related axes because they were too limiting and because larger macro forces were potentially more disruptive.

We focused on exploring how the different external factors outlined by the two axes would affect the environment into which companies would provide the products and services. Note that these external macro forces do contain technological elements.

The vertical axis describes the role and relevance of technology in the minds of the consumers and providers of technology while the horizontal axis describes availability of resources – human capital (workers with the right skill set), financial capital (investments in hardware, software, facilities or internal development) or natural resources, particularly energy — to provide IT. The resulting quadrants describe widely divergent possible futures.

- The “Tech Ration” Scenario

- This scenario describes a world in 2021 characterized by severely limited economic, energy, skill and technological resources needed to get the job done. People view technology the way they used to think of the telephone: as a tool for a given purpose. After a decade of economic decline, wars, increasingly scarce resources and protectionist government reactions, most businesses are survival-focused.

Key Question: What would be the impact of a closed-down, localized view of the world on your strategic plans?

- The “Tech Pragmatic” Scenario

- This scenario presents a similar world of limited resources but where people are highly engaged with IT and it forms a key role in their lifestyles. Social networks and communities evolved over the decade into sources of innovation, application development and services. IT plays a major role in coordinating and orchestrating the ever-changing landscape of technology and services.

Key Question: Will your strategy be able to cope with a world of limited resources but the need for agility to meet user demands?

- The “Tech Fashion” Scenario

- This scenario continues the theme where the digital natives’ perspectives have evolved to where technology is an integral part of people’s lives. The decade preceding 2021 saw a social-media-led peace, a return to economic growth, and a flourishing of technology from citizen innovators. It is a world of largely unconstrained resources and limited government. Businesses rely on technology to maximize their opportunities. However, consumers demand the latest technology and expect it to be effective.

Key Question: How will a future where the typical IT consumer owns multiple devices and expects to access any application from every one of their devices affect your strategic planning?

- The “Tech Gluttony” Scenario

- This scenario continues in 2021 with unconstrained resources, where people view technology as providing separate tools for a given purpose. Organizations developed situation-specific products and applications. Users and consumers view their technology tools as limited-life one-offs. IT budgets become focused on integrating a constantly shifting landscape of tools.

Key Question: Does a world of excessive numbers of technological tools from myriad suppliers change your strategic planning?

The four scenario stories each depict the journey to, and a description of, a plausible 2021 world. Of course, the real future is likely to be a blend of two or more of the scenarios. To gain maximum value, treat each story as a history and description of the world as it is: suspend disbelief, immerse yourself in the story, take time to reflect on the implications for your business, and discuss which plans would be most beneficial as the future unfolds.

ObPlug: Of course, Gartner analysts are available to assist in deriving specific implications for your business and formulating appropriate plans.

Introduction to the Future of the Data Center Market

Earlier this year, I was part of a team at Gartner that took a futuristic view of the data center, in a scenario-planning exercise. The results of that work have been published as The Future of the Data Center Market: Four Scenarios for Strategic Planning (Gartner clients only). My blog entries today are by my colleague, project leader Joe Skorupa, and provide a glimpse into this research.

Introduction

As a data-center-focused provider, how do you formulate strategic plans when the pace and breadth of change makes the future increasingly uncertain? Historical trends and incremental extrapolations may provide guidance for the next few years, but these approaches rarely account for disruptive change. Many Gartner clients that sell into the data center requested help formulating long-range strategic plans that embrace uncertainty. To assist our clients, a team of 15 Gartner analysts from across a wide range of IT disciplines employed the scenario-based planning process to develop research about the future of the data center market. Unlike typical Gartner research, we did not focus on 12- to 18-month actionable advice; we focused on potential market developments and disruptions in the 2016-2021 timeframe. As a result, its primary audience is C-level executives and their staffs who are responsible for long-term strategic planning. Product line managers and competitive analysts may also find this work useful.

Scenario-based planning was adopted by the US Department of Defense in the 1960s and the formal scenario-based planning framework was developed at Royal Dutch Shell in the 1970s. It has been applied to many organizations, from government entities to private companies, around the world to identify major disruptors that could impact an organization’s ability to maintain or gain competitive advantage. For this effort we used the process to identify and assess major changes in social, technological, economic, environmental and political (STEEP) environments.

These scenarios are told as stories and are not meant to be predictive; the actual future will be some subset of one or more of the stories. However, they provide a basis for deriving company-specific implications and developing a strategy that enables your company to move forward and adapt to uncertainty as the future unfolds. Exploring the alternative futures created by such major changes should help you discover potential opportunities in the market and ensure the viability of the current business models that may be critical to meeting future challenges.

To anchor the research, we focused on the following question (the Focal Issue) and its corollary:

Focal Issue: With rapidly changing end-user IT/services needs and requirements, what will be the role of the data center in 2021 and how will this affect my company’s competitiveness?

Corollary: How will the role of the data center affect the companies that sell products or services into this market?

The next post describes the scenarios themselves.

Recent research notes

This is just a quick call-out to draw your attention to the research that I’ve published recently.

- Do You Have a Business Case for a Top-Level Domain?

- I blogged previously on this topic, and this research note, done with my colleague Ray Valdes (whose coverage includes online user experience), dives deeply into consideration of the uses of gTLDs, the impact of gTLDs, the shifting landscape of how users find websites, and other things of interest to anyone considering a gTLD or preparing a business case for one.

- How to Deliver Video to Dispersed Users Without Upgrading Your Network

- Many organizations that are trying to deliver video to a lot of users think that they should use a traditional CDN. That’s not necessarily the right solution. This research note examines the range of solutions, divided by the delivery targets — Internet users outside your organization, your own employees at remote sites, Internet VPN users, and mixed-usage scenarios.

- How to Accelerate Internet Websites and Applications

- There is a range of techniques that can be used for acceleration, including network optimization, front-end optimization (sometimes called Web content optimization or Web performance optimization), and caching, which can be delivered as appliances or services. This research note looks at selecting the right solution, and combining solutions, to maximize performance within your available budget.

(These notes are for Gartner clients only, sorry.)

Cloud IaaS coverage at Gartner

I’ve got a pair of new European colleagues, and I thought I’d take a moment to introduce, on my blog, the folks who cover public cloud infrastructure as a service here at Gartner, and to answer a common question about the way we cover the space here.

Three groups of analysts here at Gartner cover cloud IaaS, and they belong to three different teams. Those teams are our Infrastructure and Operations (I&O) team, which is part of the division that offers advice to technology buyers (what Gartner calls “end-user organizations”) in the traditional Gartner client base of IT managers; our High-Tech and Telecom Provider (“HTTP”) division, which offers advice to vendors and investors along with end-users, and also produces quantitative market data such as forecasts and market statistics; and our IT1 division (formerly our Burton Group acquisition), which offers advice to technology implementors, generally IT architects and senior engineers in end-user organizations.

We all collaborate with one another, but these distinctions matter for anyone buying research from us. If you’re just buying what Gartner calls Core Research, you’ll have access to what the I&O analysts publish, along with anything that HTTP analysts publish into Core. To get access to HTTP-specific content, though, you’ll need to buy an upgrade, usually in the form of a Gartner for Business Leaders (GBL) research seat. The IT1 research is sold separately; anything that IT1 analysts write (that’s not co-authored with analysts in other groups) goes solely to IT1 subscribers. The I&O analysts and HTTP analysts are available via inquiry to anyone who buys Gartner research, but the IT1 analysts are only inquiry-accessible by those who buy IT1 research specifically. You can, however, brief any of us; client status doesn’t matter for briefings.

So, we’re:

- Lydia Leong (HTTP, North America) – Cloud IaaS, Web hosting and colocation, content delivery networks, cloud computing and Internet infrastructure in general.

- Ted Chamberlin (I&O, North America) – Web and app hosting, colocation, cloud IaaS, network services (voice, data, and Internet).

- Drue Reeves (IT1, North America) – Data centers and cloud infrastructure, both internal and external.

- Kyle Hilgendorf (IT1, North America) – Data centers and cloud infrastructure, both internal and external.

- Tiny Haynes (I&O, Europe) – Web and app hosting, colocation, cloud IaaS, carrier services.

- Gregor Petri (HTTP, Europe) – Cloud IaaS, Web hosting and colocation, carrier services.

- Chee-Eng To (HTTP, Asia) – Carrier services in Asia, including cloud IaaS.

- Vincent Fu (HTTP, China) – Carrier services in China, including cloud IaaS.

Tiny Haynes and Gregor Petri are brand-new to Gartner, and they’ll be deepening our coverage of Europe as well as contributing to global research.

The forthcoming Public Cloud IaaS Magic Quadrant

Despite various blog posts and a lot of email correspondence, there is persistent, ongoing confusion about our forthcoming Magic Quadrant for Public Cloud Infrastructure as a Service. I will attempt to clear it up here on my blog so that I have a reference I can point people to.

1. This is a new Magic Quadrant. We are doing this MQ in addition to, and not instead of, the Magic Quadrant for Cloud IaaS and Web Hosting (henceforth the “cloud/hosting MQ”). The cloud/hosting MQ will continue to be published at the end of each calendar year. This new MQ (henceforth the “public cloud MQ”) will be published in the middle of the year, annually. In other words, there will be two MQs each year. The two MQs will have entirely different qualification and evaluation criteria.

2. This new public cloud MQ covers a subset of the market covered by the existing cloud/hosting MQ. Please consult my cloud IaaS market segmentation to understand the segments covered. The existing MQ covers the traditional Web hosting market (with an emphasis on complex managed hosting), along with all eight of the cloud IaaS market segments, and it covers both public and private cloud. This new MQ covers multi-tenant clouds, and it has a strong emphasis on automated services, with a focus on the scale-out cloud hosting, virtual lab environment, self-managed virtual data center, and turnkey virtual data center segments. The existing MQ weights managed services very highly; by contrast, the new MQ emphasizes automation and self-service.

3. This is cloud compute IaaS only. This doesn’t rate cloud storage providers, PaaS providers, or anything else. IaaS in this case means that the customer has access to a normal guest OS. (It does not include, for instance, Microsoft Azure’s VM role.)

4. When we say “public cloud”, we mean massive multi-tenancy. That means that the service provider operates, in his data center, a pool of virtualized compute capacity in which multiple arbitrary customers will have VMs on the same physical server. The customer doesn’t have any idea who he’s sharing this pool of capacity with.

5. This includes cloud service providers only. This is an MQ for the public cloud compute IaaS providers themselves — the services focused on are ones like Amazon EC2, Terremark Enterprise Cloud, and so forth. This does not include any of the cloud-enablement vendors (no Eucalyptus, etc.), nor does it include any of the vendors in the ecosystem (no RightScale, etc.).

6. The target audience for this new MQ is still the same as the existing MQ. As Gartner analysts, we write for our client base. These are corporate IT buyers in mid-sized businesses or enterprises, or technology companies of any size (generally post-funding or post-revenue, i.e., at the stage where they’re looking for serious production infrastructure). We expect to weight the scoring heavily towards the requirements of organizations that need a dependable cloud, but we also recognize the value of commodity cloud to our audience, for certain use cases.

At this point, the initial vendor surveys for this MQ have been sent out. They have gone out to every vendor who requested one, so if you wanted one and did not get one, please email me. We did zero pre-qualification; if you asked, you got it. This is a data-gathering exercise, where the data will be used to determine which vendors get a formal invitation to participate in the research. We do not release the qualification criteria in advance of the formal invitations; please do not ask.

If you’re a vendor thinking of requesting a survey, please consider the above. Are you a cloud infrastructure service provider, not a cloud-building vendor or a consultancy? Is your cloud compute massively multi-tenant? Is it highly automated and focused on self-service? Do you serve enterprise customers and actively compete for enterprise deals, globally? If the answer to any of these questions is “no”, then this is not the MQ for you.